Hard disk drive

A hard disk drive (HDD), hard disk, hard drive, or fixed disk[b] is a data storage device that uses magnetic storage to store and retrieve digital information using one or more rigid, rapidly rotating disks (platters) coated with magnetic material. The platters are paired with magnetic heads, usually arranged on a moving actuator arm, which read and write data to the platter surfaces.[2] Data is accessed in a random-access manner, meaning that individual blocks of data can be stored or retrieved in any order and not only sequentially. HDDs are a type of non-volatile storage, retaining stored data even when powered off.[3][4][5]

An overview of how HDDs work

Introduced by IBM in 1956,[6] HDDs became the dominant secondary storage device for general-purpose computers by the early 1960s. Continuously improved, HDDs have maintained this position into the modern era of servers and personal computers. More than 200 companies have produced HDDs historically, though after extensive industry consolidation most current units are manufactured by Seagate, Toshiba, and Western Digital. HDD unit shipments and sales revenues are declining, though production (exabytes per year) is growing. Flash memory has a growing share of the market for secondary storage, in the form of solid-state drives (SSDs). SSDs have higher data-transfer rates, higher areal storage density, better reliability,[7] and much lower latency and access times.[8][9][10][11] Though SSDs have a higher cost per bit, they are replacing HDDs where speed, power consumption, small size, and durability are important.[10][11]

The primary characteristics of an HDD are its capacity and performance. Capacity is specified in unit prefixes corresponding to powers of 1000: a 1-terabyte (TB) drive has a capacity of 1,000 gigabytes (GB; where 1 gigabyte = 1 billion bytes). Typically, some of an HDD's capacity is unavailable to the user because it is used by the file system and the computer operating system, and possibly inbuilt redundancy for error correction and recovery. Performance is specified by the time required to move the heads to a track or cylinder (average seek time), plus the time it takes for the desired sector to move under the head (average latency, which is a function of the physical rotational speed in revolutions per minute), and finally the speed at which the data is transmitted (data rate).
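The three performance components add up for a single random request. The following is a minimal Python sketch, assuming that the average rotational latency is half a revolution and ignoring queueing, command overhead and caching; the seek time, data rate and request size in the example are hypothetical values:

```python
def rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the wanted sector is, on average, half a turn away."""
    seconds_per_revolution = 60.0 / rpm
    return 0.5 * seconds_per_revolution * 1000.0


def transfer_time_ms(request_bytes: int, data_rate_mb_per_s: float) -> float:
    """Time to stream the request past the head at a sustained rate in decimal MB/s."""
    return request_bytes / (data_rate_mb_per_s * 1_000_000) * 1000.0


def service_time_ms(seek_ms: float, rpm: float, request_bytes: int,
                    data_rate_mb_per_s: float) -> float:
    """Seek + rotational latency + transfer for one random request (queueing ignored)."""
    return (seek_ms
            + rotational_latency_ms(rpm)
            + transfer_time_ms(request_bytes, data_rate_mb_per_s))


for rpm in (5400, 7200, 15000):
    print(rpm, round(rotational_latency_ms(rpm), 2))
# prints 5400 5.56, then 7200 4.17, then 15000 2.0

# Hypothetical drive: 8.5 ms average seek, 7,200 rpm, 150 MB/s, one 4 KiB read
print(round(service_time_ms(8.5, 7200, 4096, 150.0), 2))  # 12.69
```

For small random requests the transfer term is tiny, so seek and rotational latency dominate, which is why spindle speed matters so much for HDD responsiveness.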

The two most common form factors for modern HDDs are 3.5-inch, for desktop computers, and 2.5-inch, primarily for laptops. HDDs are connected to systems by standard interface cables such as PATA (Parallel ATA), SATA (Serial ATA), USB, or SAS (Serial Attached SCSI) cables.

History

Video of modern HDD operation (cover removed)

The hard disk drive was initially developed as data storage for the IBM 305 RAMAC computer system.[27] IBM announced HDDs in 1956 as a component of the IBM 305 RAMAC system and as a new component to enhance the existing IBM 650 system, a general-purpose mainframe. The first IBM drive, the 350 RAMAC in 1956, was approximately the size of two medium-sized refrigerators and stored five million six-bit characters (3.75 megabytes)[12] on a stack of 50 disks.[28]

In 1962 the IBM 350 was superseded by the IBM 1301 disk storage unit,[29] which consisted of 50 platters, each about 1/8-inch thick and 24 inches in diameter.[30] While the IBM 350 used only two read/write heads,[28] the 1301 used an array of heads, one per platter, moving as a single unit. Cylinder-mode read/write operations were supported, and the heads flew about 250 micro-inches (about 6 µm) above the platter surface. Motion of the head array depended upon a binary adder system of hydraulic actuators which assured repeatable positioning. The 1301 cabinet was about the size of three home refrigerators placed side by side, storing the equivalent of about 21 million eight-bit bytes. Access time was about a quarter of a second.

Also in 1962, IBM introduced the model 1311 disk drive, which was about the size of a washing machine and stored two million characters on a removable disk pack. Users could buy additional packs and interchange them as needed, much like reels of magnetic tape. Later models of removable pack drives, from IBM and others, became the norm in most computer installations and reached capacities of 300 megabytes by the early 1980s. Non-removable HDDs were called "fixed disk" drives.

Some high-performance HDDs were manufactured with one head per track (e.g., the IBM 2305 in 1970) so that no time was lost physically moving the heads to a track.[31] Known as fixed-head or head-per-track disk drives, they were very expensive and are no longer in production.[32]

In 1973, IBM introduced a new type of HDD code-named "Winchester". Its primary distinguishing feature was that the disk heads were not withdrawn completely from the stack of disk platters when the drive was powered down. Instead, the heads were allowed to "land" on a special area of the disk surface upon spin-down, "taking off" again when the disk was later powered on. This greatly reduced the cost of the head actuator mechanism, but precluded removing just the disks from the drive as was done with the disk packs of the day. Instead, the first models of "Winchester technology" drives featured a removable disk module, which included both the disk pack and the head assembly, leaving the actuator motor in the drive upon removal. Later "Winchester" drives abandoned the removable media concept and returned to non-removable platters.

Like the first removable pack drive, the first "Winchester" drives used platters 14 inches (360 mm) in diameter. A few years later, designers were exploring the possibility that physically smaller platters might offer advantages. Drives with non-removable eight-inch platters appeared, and then drives that used a 5¼-inch (130 mm) form factor (a mounting width equivalent to that used by contemporary floppy disk drives). The latter were primarily intended for the then-fledgling personal computer (PC) market.

As the 1980s began, HDDs were a rare and very expensive additional feature in PCs, but by the late 1980s their cost had been reduced to the point where they were standard on all but the cheapest computers.

Most HDDs in the early 1980s were sold to PC end users as an external, add-on subsystem. The subsystem was not sold under the drive manufacturer's name but under the subsystem manufacturer's name, such as Corvus Systems and Tallgrass Technologies, or under the PC system manufacturer's name, such as the Apple ProFile. The IBM PC/XT in 1983 included an internal 10 MB HDD, and soon thereafter internal HDDs proliferated on personal computers.

External HDDs remained popular for much longer on the Apple Macintosh. Many Macintosh computers made between 1986 and 1998 featured a SCSI port on the back, making external expansion simple. Older compact Macintosh computers did not have user-accessible hard drive bays (indeed, the Macintosh 128K, Macintosh 512K, and Macintosh Plus did not feature a hard drive bay at all), so on those models external SCSI disks were the only reasonable option for expanding upon any internal storage.

The 2011 Thailand floods damaged the manufacturing plants and impacted hard disk drive cost adversely between 2011 and 2013.[33]

Driven by ever-increasing areal density since their invention, HDDs have continuously improved their characteristics; a few highlights are listed in the table above. At the same time, market application expanded from mainframe computers of the late 1950s to most mass storage applications, including computers and consumer applications such as storage of entertainment content.

Technology

Magnetic recording

A modern HDD records data by magnetizing a thin film of ferromagnetic material[e] on a disk. Sequential changes in the direction of magnetization represent binary data bits. The data is read from the disk by detecting the transitions in magnetization. User data is encoded using an encoding scheme, such as run-length limited encoding,[f] which determines how the data is represented by the magnetic transitions.
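As a sketch of the idea that the data lives in the transitions, the code below assumes the common NRZI convention (a 1 is written as a reversal of magnetization, a 0 as no reversal) and checks the (d, k) constraint that run-length limited codes enforce: at least d and at most k 0s between consecutive 1s. The function names are hypothetical, and the code tables that map user bits to constrained channel bits are not shown.

```python
from typing import List


def nrzi_write(channel_bits: List[int], start: int = 1) -> List[int]:
    """Return the magnetization direction (+1 or -1) of each bit cell.
    A 1 flips the direction (a transition); a 0 leaves it unchanged."""
    level = start
    directions = []
    for bit in channel_bits:
        if bit:
            level = -level
        directions.append(level)
    return directions


def nrzi_read(directions: List[int], start: int = 1) -> List[int]:
    """Recover the bits by detecting transitions between neighboring cells."""
    previous = start
    bits = []
    for level in directions:
        bits.append(1 if level != previous else 0)
        previous = level
    return bits


def satisfies_rll(channel_bits: List[int], d: int, k: int) -> bool:
    """True if every run of 0s between two 1s has length d..k and no run exceeds k."""
    zeros, seen_one = 0, False
    for bit in channel_bits:
        if bit:
            if (seen_one and zeros < d) or zeros > k:
                return False
            seen_one, zeros = True, 0
        else:
            zeros += 1
    return zeros <= k


bits = [0, 1, 0, 0, 1, 0, 0, 0, 1]
print(nrzi_write(bits))                     # [1, -1, -1, -1, 1, 1, 1, 1, -1]
print(nrzi_read(nrzi_write(bits)) == bits)  # True
print(satisfies_rll(bits, d=2, k=7))        # True
print(satisfies_rll([1, 1, 0], d=1, k=7))   # False: adjacent 1s
```

The lower bound d keeps transitions far enough apart to be resolved at high density, while the upper bound k ensures transitions occur often enough for the read channel to stay synchronized.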

A typical HDD design consists of a spindle that holds flat circular disks, also called platters, which hold the recorded data. The platters are made from a non-magnetic material, usually aluminum alloy, glass, or ceramic, and are coated with a shallow layer of magnetic material typically 10–20 nm in depth, with an outer layer of carbon for protection.[35][36][37] For reference, a standard piece of copy paper is 0.07–0.18 millimeters (70,000–180,000 nm).[38]

Recording of single magnetisations of bits on a 200 MB HDD-platter (recording made visible using CMOS-MagView).[39]

Longitudinal recording (standard) & perpendicular recording diagram

The platters in contemporary HDDs are spun at speeds varying from 4,200 rpm in energy-efficient portable devices, to 15,000 rpm for high-performance servers.[40] The first HDDs spun at 1,200 rpm[6] and, for many years, 3,600 rpm was the norm.[41] As of December 2013, the platters in most consumer-grade HDDs spin at either 5,400 rpm or 7,200 rpm.[42]

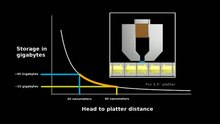

Information is written to and read from a platter as it rotates past devices called read-and-write heads that are positioned to operate very close to the magnetic surface, with their flying height often in the range of tens of nanometers. The read-and-write head is used to detect and modify the magnetization of the material passing immediately under it.

In modern drives, there is one head for each magnetic platter surface on the spindle, mounted on a common arm. An actuator arm (or access arm) moves the heads on an arc (roughly radially) across the spinning platters, allowing each head to access almost the entire surface of its platter. The arm is moved using a voice coil actuator or, in some older designs, a stepper motor. Early hard disk drives wrote data at a constant bit rate, so every track held the same amount of data, but modern drives (since the 1990s) use zone bit recording, increasing the write speed from inner to outer zones and thereby storing more data per track in the outer zones.
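The capacity gain from zone bit recording can be estimated with a simple model. The sketch below rests on stated assumptions: tracks are evenly spaced, zones have equal width, each zone records at the maximum linear density allowed at its shortest track, and bits per track therefore scale with radius. The 20 to 46 mm recording band is hypothetical.

```python
def zone_inner_radii(inner_r_mm: float, outer_r_mm: float, zones: int) -> list:
    """Inner radius of each equal-width zone, innermost zone first."""
    width = (outer_r_mm - inner_r_mm) / zones
    return [inner_r_mm + i * width for i in range(zones)]


def zbr_capacity_ratio(inner_r_mm: float, outer_r_mm: float, zones: int) -> float:
    """Capacity with zone bit recording relative to a constant-bits-per-track layout.

    Every track in a zone holds as many bits as the zone's innermost (shortest)
    track allows; bits per track scale with circumference, i.e. with radius.
    """
    radii = zone_inner_radii(inner_r_mm, outer_r_mm, zones)
    return sum(radii) / zones / inner_r_mm


def relative_data_rate(inner_r_mm: float, outer_r_mm: float, zones: int) -> list:
    """At constant rpm, each zone's data rate relative to the innermost zone."""
    return [r / inner_r_mm for r in zone_inner_radii(inner_r_mm, outer_r_mm, zones)]


# Hypothetical platter: recording band from 20 mm to 46 mm radius
print(zbr_capacity_ratio(20, 46, 1))              # 1.0 (one zone = no zoning)
print(round(zbr_capacity_ratio(20, 46, 2), 3))    # 1.325
print(round(zbr_capacity_ratio(20, 46, 30), 2))   # 1.63
print(relative_data_rate(20, 46, 2))              # [1.0, 1.65]
```

Because the platters turn at a constant rate, the same radius ratios describe how much faster data passes under the head in the outer zones.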

In modern drives, the small size of the magnetic regions creates the danger that their magnetic state might be lost because of thermal effects, thermally induced magnetic instability which is commonly known as the "superparamagnetic limit". To counter this, the platters are coated with two parallel magnetic layers, separated by a 3-atom layer of the non-magnetic element ruthenium, and the two layers are magnetized in opposite orientation, thus reinforcing each other.[43] Another technology used to overcome thermal effects to allow greater recording densities is perpendicular recording, first shipped in 2005,[44] and as of 2007 the technology was used in many HDDs.[45][46][47]

In 2004, a new concept was introduced to allow further increase of the data density in magnetic recording, using recording media consisting of coupled soft and hard magnetic layers. That so-called exchange spring media, also known as exchange coupled composite media, allows good writability due to the write-assist nature of the soft layer. However, the thermal stability is determined only by the hardest layer and not influenced by the soft layer.[48][49]

Components

A typical HDD has two electric motors: a spindle motor that spins the disks and an actuator (motor) that positions the read/write head assembly across the spinning disks. The disk motor has an external rotor attached to the disks; the stator windings are fixed in place. Opposite the actuator at the end of the head support arm is the read-write head; thin printed-circuit cables connect the read-write heads to amplifier electronics mounted at the pivot of the actuator. The head support arm is very light, but also stiff; in modern drives, acceleration at the head reaches 550 g.

Close-up of a single read-write head, showing the side facing the platter

The actuator is a permanent magnet and moving-coil motor that swings the heads to the desired position. A metal plate supports a squat neodymium-iron-boron (NIB) high-flux magnet. Beneath this plate is the moving coil, often referred to as the voice coil by analogy to the coil in loudspeakers, which is attached to the actuator hub, and beneath that is a second NIB magnet, mounted on the bottom plate of the motor (some drives have only one magnet).

The voice coil itself is shaped rather like an arrowhead, and made of doubly coated copper magnet wire. The inner layer is insulation, and the outer is thermoplastic, which bonds the coil together after it is wound on a form, making it self-supporting. The portions of the coil along the two sides of the arrowhead (which point to the actuator bearing center) then interact with the magnetic field of the fixed magnet. Current flowing radially outward along one side of the arrowhead and radially inward on the other produces the tangential force. If the magnetic field were uniform, each side would generate opposing forces that would cancel each other out. Therefore, the surface of the magnet is half north pole and half south pole, with the radial dividing line in the middle, causing the two sides of the coil to see opposite magnetic fields and produce forces that add instead of canceling. Currents along the top and bottom of the coil produce radial forces that do not rotate the head.
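The cancellation argument can be checked with the force on a straight conductor in a magnetic field, F = B·I·L. This one-dimensional sketch treats each side of the arrowhead as a single straight leg and signs the forces by direction; the field strength, current and leg length are hypothetical values, and a real coil multiplies the result by its number of turns.

```python
def leg_force_n(field_t: float, current_a: float, length_m: float, direction: int) -> float:
    """Signed tangential force F = B * I * L on one straight leg of the coil.
    direction is +1 for current flowing radially outward, -1 for inward."""
    return field_t * current_a * length_m * direction


def net_force_n(field_left_t: float, field_right_t: float,
                current_a: float, leg_length_m: float) -> float:
    """Current runs outward along the left leg and back inward along the right leg."""
    return (leg_force_n(field_left_t, current_a, leg_length_m, +1)
            + leg_force_n(field_right_t, current_a, leg_length_m, -1))


# Hypothetical values: 0.5 T gap field, 1 A coil current, 10 mm active leg length
print(net_force_n(0.5, 0.5, 1.0, 0.01))   # 0.0: uniform field, the forces cancel
print(net_force_n(0.5, -0.5, 1.0, 0.01))  # 0.01: split poles, the forces add
```

Reversing the sign of the field under one leg is exactly what the half-north, half-south magnet face achieves.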

The HDD's electronics control the movement of the actuator and the rotation of the disk, and perform reads and writes on demand from the disk controller. Feedback to the drive electronics is provided by special segments of the disk dedicated to servo feedback. These are either complete concentric circles (in the case of dedicated servo technology) or segments interspersed with real data (in the case of embedded servo technology). The servo feedback optimizes the signal-to-noise ratio of the GMR sensors by adjusting the voice coil of the actuator arm. The spinning of the disk also uses a servo motor. Modern disk firmware is capable of scheduling reads and writes efficiently on the platter surfaces and remapping sectors of the media which have failed.
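Track following can be illustrated as a closed loop in which the position error decoded from the servo segments drives the voice-coil current. The sketch below is a toy proportional-derivative (PD) controller, not any drive's firmware; the gains, time step and unit-mass plant are assumptions chosen so the loop is critically damped.

```python
def settle_head(target: float, steps: int = 2000, dt: float = 1e-3,
                kp: float = 100.0, kd: float = 20.0) -> float:
    """Simulate a toy track-following loop and return the final head position.

    Assumptions (not from any real drive): head acceleration is proportional to
    voice-coil current, position is in arbitrary track units, and with the
    default gains s^2 + kd*s + kp has a double root at s = -10 (critical damping).
    """
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        error = target - position                   # position error read from servo fields
        coil_current = kp * error - kd * velocity   # PD control law
        acceleration = coil_current                 # force ~ current, unit mass
        velocity += acceleration * dt               # semi-implicit Euler integration
        position += velocity * dt
    return position


print(round(settle_head(100.0), 3))  # 100.0
```

The derivative term damps the motion so the head settles on the target track without ringing; real servo loops add feedforward, filtering and compensation for mechanical resonances.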

Error rates and handling

Modern drives make extensive use of error correction codes (ECCs), particularly Reed–Solomon error correction. These techniques store extra bits, determined by mathematical formulas, for each block of data; the extra bits allow many errors to be corrected invisibly. The extra bits themselves take up space on the HDD, but allow higher recording densities to be employed without causing uncorrectable errors, resulting in much larger storage capacity.[50] For example, a typical 1 TB hard disk with 512-byte sectors provides additional capacity of about 93 GB for the ECC data.[51]
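Reed–Solomon and LDPC decoders are too long to show here, so the sketch below swaps in a much simpler code, Hamming(7,4), purely to illustrate the principle: three extra parity bits per four data bits let the decoder locate and flip any single wrong bit. Its overhead (3 of every 7 bits) is far higher than that of a drive's ECC.

```python
from typing import List, Tuple


def hamming74_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as 7 bits; parity bits sit at positions 1, 2 and 4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4          # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4          # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(code: List[int]) -> Tuple[List[int], int]:
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # the syndrome names the bad position
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], position


word = [1, 0, 1, 1]
stored = hamming74_encode(word)
stored[5] ^= 1                      # simulate a read error in bit position 6
print(hamming74_decode(stored))     # ([1, 0, 1, 1], 6)
```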

In the newest drives, as of 2009,[52] low-density parity-check codes (LDPC) were supplanting Reed–Solomon; LDPC codes enable performance close to the Shannon Limit and thus provide the highest storage density available.[52][53]

Typical hard disk drives attempt to "remap" the data in a physical sector that is failing to a spare physical sector provided by the drive's "spare sector pool" (also called "reserve pool"),[54] relying on the ECC to recover the stored data while the number of errors in a bad sector is still low enough. The S.M.A.R.T. (Self-Monitoring, Analysis and Reporting Technology) feature counts the total number of errors in the entire HDD fixed by ECC (although not on all hard drives, as the related S.M.A.R.T. attributes "Hardware ECC Recovered" and "Soft ECC Correction" are not consistently supported) and the total number of performed sector remappings, as the occurrence of many such errors may predict an HDD failure.
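The remapping policy can be modeled in a few lines. The class below is hypothetical: the threshold for "too many corrected bits" and the placement of the spare pool after the user-visible sectors are assumptions made for illustration.

```python
from collections import deque


class DefectMap:
    """Hypothetical model of grown-defect remapping, not any vendor's firmware.

    Logical blocks normally map to the physical sector with the same number;
    blocks whose sectors degrade are redirected to sectors from a spare pool
    that lies outside the user-visible range.
    """

    def __init__(self, user_sectors: int, spare_sectors: int):
        self.remapped = {}                    # LBA -> spare physical sector
        self.spare_pool = deque(range(user_sectors, user_sectors + spare_sectors))
        self.reallocated_count = 0            # cf. the S.M.A.R.T. remapping count

    def physical_sector(self, lba: int) -> int:
        return self.remapped.get(lba, lba)

    def read(self, lba: int, corrected_bits: int, threshold: int) -> int:
        """Return the sector used after a read that needed `corrected_bits` ECC fixes.

        If ECC had to correct many bits, the data is still recoverable, so it is
        moved to a spare now rather than after the sector becomes unreadable.
        """
        if corrected_bits >= threshold:
            if not self.spare_pool:
                raise RuntimeError("spare sector pool exhausted")
            self.remapped[lba] = self.spare_pool.popleft()
            self.reallocated_count += 1
        return self.physical_sector(lba)


drive = DefectMap(user_sectors=1000, spare_sectors=2)
print(drive.read(42, corrected_bits=1, threshold=8))       # 42: healthy sector
print(drive.read(42, corrected_bits=9, threshold=8))       # 1000: moved to first spare
print(drive.physical_sector(42), drive.reallocated_count)  # 1000 1
```

A steadily rising reallocation count is the kind of signal S.M.A.R.T. monitoring watches as a possible predictor of failure.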

The "No-ID Format", developed by IBM in the mid-1990s, contains information about which sectors are bad and where remapped sectors have been located.[55]

Only a tiny fraction of the detected errors ends up as not correctable. For example, the specification for an enterprise SAS disk (a model from 2013) estimates this fraction to be one uncorrected error in every 10¹⁶ bits,[56] and another SAS enterprise disk from 2013 specifies similar error rates.[57] Another modern (as of 2013) enterprise SATA disk specifies an error rate of less than 10 non-recoverable read errors in every 10¹⁶ bits.[58][needs update?] An enterprise disk with a Fibre Channel interface, which uses 520-byte sectors to support the Data Integrity Field standard to combat data corruption, specified similar error rates in 2005.[59]
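To put such rates in perspective, the expected number of unrecoverable errors during one complete read of a drive is the number of bits read divided by the specified bits per error. This sketch assumes that errors are independent and evenly spread, so a Poisson approximation gives the chance of seeing at least one; the 4 TB capacity is hypothetical.

```python
import math


def expected_unrecoverable_errors(bytes_read: float, bits_per_error: float) -> float:
    """Expected count if errors occur at the specified average rate."""
    return bytes_read * 8 / bits_per_error


def probability_of_any_error(expected: float) -> float:
    """Poisson approximation: assumes errors are independent and rare."""
    return 1.0 - math.exp(-expected)


full_read = 4e12                     # hypothetical 4 TB drive read end to end
for bits_per_error in (1e15, 1e16):  # "10 in 10^16" is the same as 1 in 10^15
    n = expected_unrecoverable_errors(full_read, bits_per_error)
    print(bits_per_error, round(n, 4), round(probability_of_any_error(n), 4))
```

Under these assumptions, a full read of 4 TB encounters an unrecoverable error with a probability of roughly 3% at 1 error in 10¹⁵ bits and roughly 0.3% at 1 in 10¹⁶.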

The worst type of errors are silent data corruptions which are errors undetected by the disk firmware or the host operating system; some of these errors may be caused by hard disk drive malfunctions.[60]

Development

The rate of areal density advancement was similar to Moore's law (doubling every two years) through 2010: 60% per year during 1988–1996, 100% per year during 1996–2003, and 30% per year during 2003–2010.[61] Gordon Moore (1997) called the increase "flabbergasting,"[62] while observing later that growth cannot continue forever.[63] Price improvement decelerated to −12% per year during 2010–2017,[64] as the growth of areal density slowed. The rate of advancement for areal density slowed to 10% per year during 2010–2016,[65] and there was difficulty in migrating from perpendicular recording to newer technologies.[66]
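Annual growth rates compound. The helpers below convert a doubling time into the equivalent annual rate and multiply out the quoted periods. Counting 1988–1996 as 8 years and the two later spans as 7 years each is an assumption.

```python
def annual_rate_from_doubling(years_to_double: float) -> float:
    """Constant yearly growth rate that doubles a quantity in the given time."""
    return 2 ** (1 / years_to_double) - 1


def cumulative_growth(periods) -> float:
    """Multiply out (annual_rate, years) pairs into one overall growth factor."""
    factor = 1.0
    for annual_rate, years in periods:
        factor *= (1 + annual_rate) ** years
    return factor


print(round(annual_rate_from_doubling(2), 3))  # 0.414, i.e. about 41% per year

# Rates quoted above; treating the spans as 8, 7 and 7 whole years is an assumption
areal_density_history = [(0.60, 8), (1.00, 7), (0.30, 7)]
print(f"{cumulative_growth(areal_density_history):.2e}")  # 3.45e+04
```

So "doubling every two years" corresponds to about 41% per year, and the 1996–2003 rate of 100% per year was well above it.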

As bit cell size decreases, more data can be put onto a single drive platter. In 2013, a production desktop 3 TB HDD (with four platters) would have had an areal density of about 500 Gbit/in², which would have amounted to a bit cell comprising about 18 magnetic grains (11 by 1.6 grains).[67] Since the mid-2000s, areal density progress has increasingly been challenged by a superparamagnetic trilemma involving grain size, grain magnetic strength, and ability of the head to write.[68] To maintain an acceptable signal-to-noise ratio, smaller grains are required; smaller grains may self-reverse (electrothermal instability) unless their magnetic strength is increased, but known write head materials are unable to generate a magnetic field strong enough to write such a medium. Several new magnetic storage technologies are being developed to overcome or at least abate this trilemma and thereby maintain the competitiveness of HDDs with respect to products such as flash memory-based solid-state drives (SSDs).
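The bit cell figure follows from a unit conversion: one square inch is (25.4 × 10⁶ nm)², so dividing by the areal density gives the average area per bit. The per-grain figure in this sketch simply divides that area by the roughly 18 grains quoted above and ignores grain boundaries and track spacing.

```python
NM_PER_INCH = 25.4e6  # 1 inch = 25.4 mm = 25.4 million nm


def bit_cell_area_nm2(gbit_per_square_inch: float) -> float:
    """Average area available to one bit at a given areal density."""
    return NM_PER_INCH ** 2 / (gbit_per_square_inch * 1e9)


cell = bit_cell_area_nm2(500)      # 2013 desktop figure quoted above
print(round(cell))                 # 1290 (nm^2 per bit)
print(round(cell / 18))            # 72 (nm^2 per grain, if ~18 grains share a cell)
```

Shrinking the cell further without reducing the number of grains per bit means shrinking the grains themselves, which is where the thermal-stability side of the trilemma comes in.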

In 2013, Seagate introduced one such technology, shingled magnetic recording (SMR).[69] However, SMR comes with design complexities that may cause reduced write performance.[70][71] Other new recording technologies that, as of 2016, still remain under development include heat-assisted magnetic recording (HAMR),[72][73] microwave-assisted magnetic recording (MAMR),[74][75] two-dimensional magnetic recording (TDMR),[67][76] bit-patterned recording (BPR),[77] and "current perpendicular to plane" giant magnetoresistance (CPP/GMR) heads.[78][79][80]
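One source of SMR's write penalty is that tracks overlap like roof shingles, so writing a track partially overwrites the next one. Updating a track in place therefore means rewriting every later track in its band. The sketch below counts that cost under simplifying assumptions (a hypothetical 20-track band, uniformly random single-track updates, no caching); real drives use caches and address indirection to hide much of it.

```python
def tracks_written_for_update(band_size: int, track: int) -> int:
    """Tracks that must be (re)written to update one track in place in a shingled band.

    Writing track `track` clips the following track, which clips the one after it,
    so every later track in the band must be read first and written back.
    """
    if not 0 <= track < band_size:
        raise ValueError("track is outside the band")
    return band_size - track


def average_write_amplification(band_size: int) -> float:
    """Mean tracks written per single-track update, assuming uniformly random updates."""
    total = sum(tracks_written_for_update(band_size, t) for t in range(band_size))
    return total / band_size


print(tracks_written_for_update(20, 19))  # 1: last track in the band
print(tracks_written_for_update(20, 0))   # 20: whole band rewritten
print(average_write_amplification(20))    # 10.5
```

Sequential writes that fill a band from its first track onward avoid the penalty entirely, which is why SMR suits append-heavy workloads better than small random updates.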

The rate of areal density growth has dropped below the historical Moore's law rate of 40% per year, and the deceleration is expected to persist through at least 2020. Depending upon assumptions on feasibility and timing of these technologies, the median forecast by industry observers and analysts for 2020 and beyond for areal density growth is 20% per year, with a range of 10–30%.[81][82][83][84] The achievable limit for the HAMR technology in combination with BPR and SMR may be 10 Tbit/in²,[85] which would be 20 times higher than the 500 Gbit/in² represented by 2013 production desktop HDDs. As of 2015, HAMR HDDs had been delayed several years and were expected in 2018. They require a different architecture, with redesigned media and read/write heads, new lasers, and new near-field optical transducers.[86]
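Compounding shows what the forecast range implies. Assuming constant growth from the 500 Gbit/in² baseline (an illustration, not a forecast), the number of years needed to reach a 10 Tbit/in² limit is:

```python
import math


def years_to_reach(start: float, target: float, annual_growth: float) -> float:
    """Years of constant compound growth needed to go from start to target."""
    return math.log(target / start) / math.log(1 + annual_growth)


# 500 Gbit/in^2 (2013) to a possible 10 Tbit/in^2 limit, across the forecast range
for rate in (0.10, 0.20, 0.30):
    print(f"{rate:.0%}: {years_to_reach(500, 10_000, rate):.1f} years")
# 10%: 31.4 years, 20%: 16.4 years, 30%: 11.4 years
```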

Capacity

The capacity of a hard disk drive, as reported by an operating system to the end user, is smaller than the amount stated by the manufacturer for several reasons: the operating system uses some space, some space is used for data redundancy, and some is used for file system structures. In addition, the difference between capacities reported with SI decimal prefixes and with binary prefixes can create a false impression of missing capacity.

Calculation

Modern hard disk drives appear to their host controller as a contiguous set of logical blocks, and the gross drive capacity is calculated by multiplying the number of blocks by the block size. This information is available from the manufacturer's product specification, and from the drive itself through use of operating system functions that invoke low-level drive commands.[87][88]
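The calculation is a single multiplication. In this sketch, the block count is an illustrative value of the kind reported by drives sold as 1 TB, and the Linux helper relies on the kernel's sysfs `size` attribute, which is counted in 512-byte units regardless of the drive's logical block size.

```python
from pathlib import Path


def gross_capacity_bytes(lba_count: int, logical_block_size: int = 512) -> int:
    """Gross capacity = number of addressable blocks x block size."""
    return lba_count * logical_block_size


def linux_sysfs_capacity_bytes(device: str) -> int:
    """Linux-only helper; /sys/block/<device>/size counts 512-byte units
    whatever the drive's logical block size."""
    sectors = int(Path(f"/sys/block/{device}/size").read_text())
    return sectors * 512


# Illustrative block count of the kind reported by drives sold as "1 TB"
print(gross_capacity_bytes(1_953_525_168))  # 1000204886016
```

The result is slightly above 10¹² bytes, consistent with capacities being quoted in decimal units.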

The gross capacity of older HDDs is calculated as the product of the number of cylinders per recording zone, the number of bytes per sector (most commonly 512), and the count of zones of the drive.[citation needed] Some modern SATA drives also report cylinder-head-sector (CHS) capacities, but these are not physical parameters because the reported values are constrained by historic operating system interfaces. The C/H/S scheme has been replaced by logical block addressing (LBA), a simple linear addressing scheme that locates blocks by an integer index, which starts at LBA 0 for the first block and increments thereafter.[89] When using the C/H/S method to describe modern large drives, the number of heads is often set to 64, although a typical hard disk drive, as of 2013, has between one and four platters.
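Once a (logical) geometry is chosen, the C/H/S and LBA schemes are related by a fixed formula. The sketch below uses 16 heads and 63 sectors per track as example parameters; as noted above, reported geometries do not describe the drive's physical layout.

```python
from typing import Tuple


def chs_to_lba(c: int, h: int, s: int, heads: int, sectors_per_track: int) -> int:
    """Cylinders and heads count from 0; sectors count from 1."""
    return (c * heads + h) * sectors_per_track + (s - 1)


def lba_to_chs(lba: int, heads: int, sectors_per_track: int) -> Tuple[int, int, int]:
    """Inverse mapping for the same logical geometry."""
    cylinder, remainder = divmod(lba, heads * sectors_per_track)
    head, sector_index = divmod(remainder, sectors_per_track)
    return cylinder, head, sector_index + 1


# Example logical geometry: 16 heads x 63 sectors per track
print(chs_to_lba(0, 0, 1, 16, 63))   # 0: the first block is LBA 0
print(chs_to_lba(1, 0, 1, 16, 63))   # 1008
print(lba_to_chs(2048, 16, 63))      # (2, 0, 33)
```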

In modern HDDs, spare capacity for defect management is not included in the published capacity; however, in many early HDDs a certain number of sectors were reserved as spares, thereby reducing the capacity available to the operating system.

For RAID subsystems, data integrity and fault-tolerance requirements also reduce the realized capacity. For example, a RAID 1 array has about half the total capacity as a result of data mirroring, while a RAID 5 array with x drives loses 1/x of its capacity (equal to the capacity of a single drive) to storing parity information. RAID subsystems are multiple drives that appear to the user as one or more drives but provide fault tolerance. Most RAID vendors use checksums to improve data integrity at the block level. Some vendors design systems using HDDs with 520-byte sectors that contain 512 bytes of user data and eight checksum bytes, or use separate 512-byte sectors for the checksum data.[90]
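The capacity arithmetic for the two RAID levels above, and the user-data share of a 520-byte sector, can be sketched as follows. The function names are hypothetical, and the RAID 1 case assumes drives mirrored in pairs.

```python
def raid_usable_tb(level: int, drives: int, drive_tb: float) -> float:
    """Usable capacity for the two RAID levels discussed above."""
    if level == 1:
        if drives < 2 or drives % 2:
            raise ValueError("this sketch assumes drives mirrored in pairs")
        return drives * drive_tb / 2            # every block is stored twice
    if level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least three drives")
        return (drives - 1) * drive_tb          # one drive's worth holds parity
    raise ValueError("only RAID 1 and RAID 5 are modeled")


def user_fraction(sector_bytes: int = 520, user_bytes: int = 512) -> float:
    """Share of each sector left for user data when the rest holds a checksum."""
    return user_bytes / sector_bytes


print(raid_usable_tb(1, 2, 4.0))    # 4.0
print(raid_usable_tb(5, 4, 4.0))    # 12.0
print(round(user_fraction(), 4))    # 0.9846
```

Per-sector checksums in 520-byte sectors therefore cost about 1.5% of raw capacity, far less than mirroring or parity.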

Some systems may use hidden partitions for system recovery, reducing the capacity available to the end user.

Formatting

Data is stored on a hard drive in a series of logical blocks. Each block is delimited by markers identifying its start and end, error detecting and correcting information, and space between blocks to allow for minor timing variations. These blocks often contained 512 bytes of usable data, but other sizes have been used. As drive density increased, an initiative known as Advanced Format extended the block size to 4096 bytes of usable data, with a resulting significant reduction in the amount of disk space used for block headers, error checking data, and spacing.

The process of initializing these logical blocks on the physical disk platters is called low-level formatting, which is usually performed at the factory and is not normally changed in the field.[91] High-level formatting writes data structures used by the operating system to organize data files on the disk. This includes writing partition and file system structures into selected logical blocks. For example, some of the disk space will be used to hold a directory of disk file names and a list of logical blocks associated with a particular file.

Examples of partition mapping schemes include the Master Boot Record (MBR) and the GUID Partition Table (GPT). Examples of data structures stored on disk to retrieve files include the File Allocation Table (FAT) in the DOS file system and inodes in many UNIX file systems, as well as other operating system data structures (also known as metadata). As a consequence, not all the space on an HDD is available for user files, but this system overhead is usually small compared with user data.
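As a concrete example of such on-disk structures, the classic MBR keeps four 16-byte partition entries starting at byte offset 446 of the first sector and ends that sector with the signature bytes 0x55 0xAA. The parser below is a minimal sketch: it reads only the partition type, bootable flag, starting LBA and sector count, ignores the legacy CHS fields, and builds a synthetic sector instead of reading a real disk.

```python
import struct
from typing import List, NamedTuple


class MbrPartition(NamedTuple):
    bootable: bool
    type_code: int
    first_lba: int
    sector_count: int


def parse_mbr(sector0: bytes) -> List[MbrPartition]:
    """Parse the four primary entries of a classic MBR partition table."""
    if len(sector0) < 512 or sector0[510:512] != b"\x55\xaa":
        raise ValueError("not a 512-byte sector ending in the 0x55AA signature")
    partitions = []
    for i in range(4):
        offset = 446 + 16 * i                      # table starts at byte 446
        status, type_code = sector0[offset], sector0[offset + 4]
        first_lba, count = struct.unpack_from("<II", sector0, offset + 8)
        if type_code != 0:                         # type 0x00 marks an unused slot
            partitions.append(MbrPartition(status == 0x80, type_code, first_lba, count))
    return partitions


# Build a synthetic sector with one bootable Linux (type 0x83) partition
sector = bytearray(512)
sector[510:512] = b"\x55\xaa"
struct.pack_into("<B3sB3sII", sector, 446, 0x80, bytes(3), 0x83, bytes(3), 2048, 1_000_000)
print(parse_mbr(bytes(sector)))
# [MbrPartition(bootable=True, type_code=131, first_lba=2048, sector_count=1000000)]
```

On a GPT disk, the first sector usually holds a protective MBR with a single entry of type 0xEE, which this parser would report as one partition.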

Units

The total capacity of HDDs is given by manufacturers using SI decimal prefixes such as gigabytes (1 GB = 1,000,000,000 bytes) and terabytes (1 TB = 1,000,000,000,000 bytes).[92] This practice dates back to the early days of computing;[94] by the 1970s, "million", "mega" and "M" were consistently used in the decimal sense for drive capacity.[95][96][97] However, capacities of memory are quoted using a binary interpretation of the prefixes, i.e. using powers of 1024 instead of 1000.

Software reports hard disk drive or memory capacity in different forms using either decimal or binary prefixes. The Microsoft Windows family of operating systems uses the binary convention when reporting storage capacity, so an HDD offered by its manufacturer as a 1 TB drive is reported by these operating systems as a 931 GB HDD. Mac OS X 10.6 ("Snow Leopard") uses the decimal convention when reporting HDD capacity.[98] The default behavior of the df command-line utility on Linux is to report the HDD capacity as a number of 1024-byte units.[99]
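The 931 GB figure is the same byte count divided by powers of 1024 instead of 1000. A minimal sketch, with a hypothetical helper name:

```python
def in_units(n_bytes: int, base: int, power: int) -> float:
    """Express a byte count in units of base**power (base 1000 for SI, 1024 for binary)."""
    return n_bytes / base ** power


one_tb = 10**12                                 # "1 TB" as marketed
print(in_units(one_tb, 1000, 4))                # 1.0 (TB, decimal)
print(round(in_units(one_tb, 1024, 3), 2))      # 931.32 (GiB, shown as "GB" by Windows)
print(in_units(one_tb, 1024, 1))                # 976562500.0 (df's default 1024-byte blocks)
```

No bytes are missing; the gap between the two figures grows with each prefix step (about 2.4% at kilo, 7.4% at giga, 9.1% at tera).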

The difference between the decimal and binary prefix interpretation caused some consumer confusion and led to class action suits against HDD manufacturers. The plaintiffs argued that the use of decimal prefixes effectively misled consumers while the defendants denied any wrongdoing or liability, asserting that their marketing and advertising complied in all respects with the law and that no class member sustained any damages or injuries.[100][101][102]

Price evolution

HDD price per byte improved at the rate of −40% per year during 1988–1996, −51% per year during 1996–2003, and −34% per year during 2003–2010.[20][61] The price improvement decelerated to −13% per year during 2011–2014, as areal density increase slowed and the 2011 Thailand floods damaged manufacturing facilities.[66]
